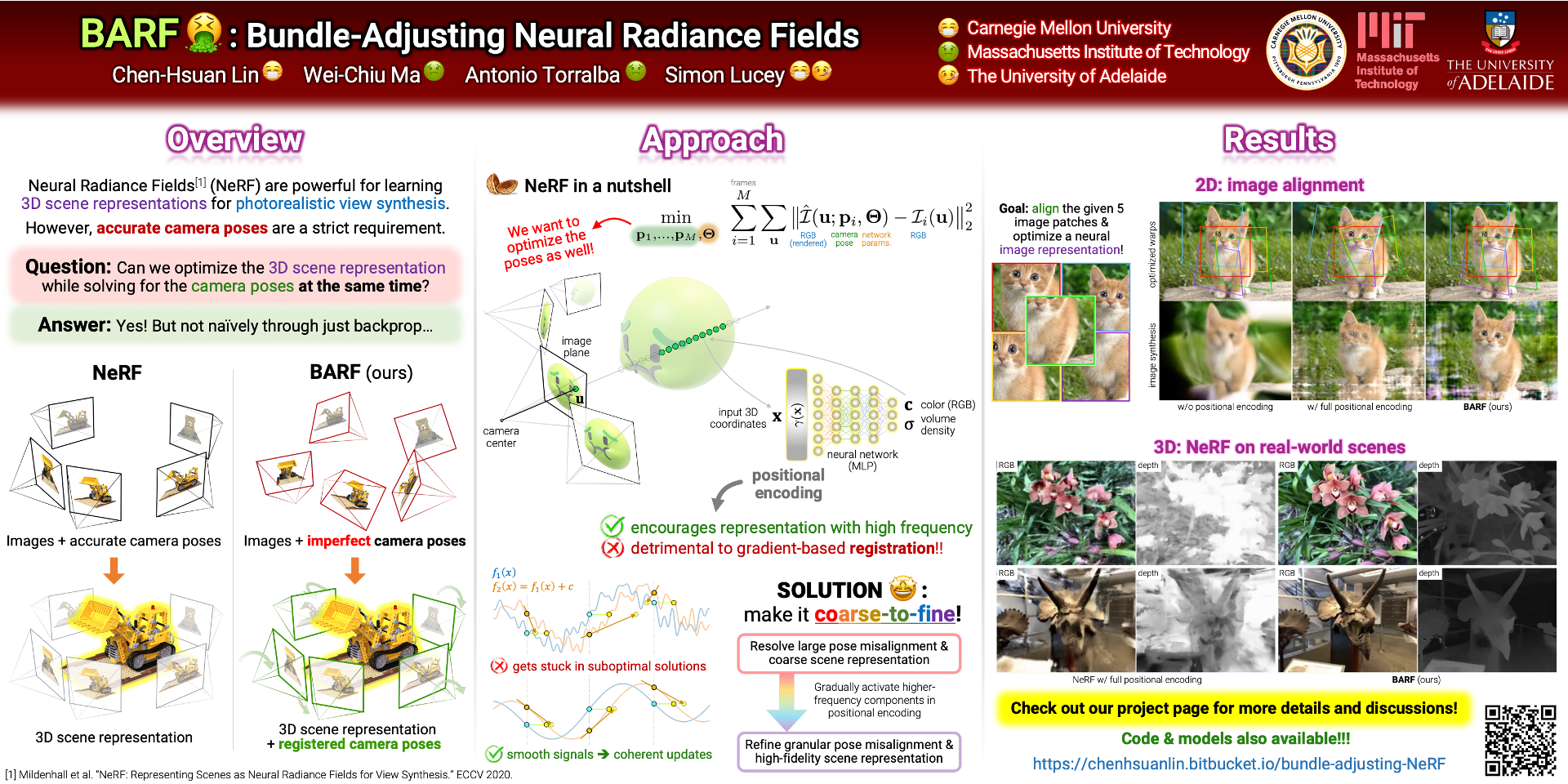

(videos are best viewed with Google Chrome on a desktop/laptop)

Abstract

Neural Radiance Fields (NeRF) have recently gained a surge of interest within the computer vision community for their power to synthesize photorealistic novel views of real-world scenes. One limitation of NeRF, however, is its requirement of accurate camera poses to learn the scene representations. In this paper, we propose Bundle-Adjusting Neural Radiance Fields (BARF) for training NeRF from imperfect (or even unknown) camera poses, that is, the joint problem of learning neural 3D representations and registering camera frames. We establish a theoretical connection to classical image alignment and show that coarse-to-fine registration is also applicable to NeRF. Furthermore, we show that naively applying positional encoding in NeRF has a negative impact on registration with a synthesis-based objective. Experiments on synthetic and real-world data show that BARF can effectively optimize the neural scene representations and resolve large camera pose misalignment at the same time. This enables view synthesis and localization of video sequences from unknown camera poses, opening up new avenues for visual localization systems (e.g. SLAM) and potential applications for dense 3D mapping and reconstruction.

Image alignment (2D)

Given 5 image patches of a tabby cat (below), can a neural network learn to align them while also fitting them to a high-quality coordinate-based neural image representation?

| w/o positional encoding | w/ full positional encoding | BARF (ours) |

We can also visualize how positional encoding affects the basin of attraction, where we attempt to warp a center patch to a target patch cropped by a certain offset.

We repeat the same procedure for every possible target offset.

Compared to the baselines, BARF effectively widens the basin of attraction with much lower image reconstruction error.

Please see the supplementary document for a more detailed explanation of the experiment.

(white contour: threshold at a translational error of 0.5 pixels)

| BARF (ours) | w/ full positional encoding | w/o positional encoding |

| target warp | optimized warp | warp error | image recon. error |
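The three settings compared above (no positional encoding, full positional encoding, and BARF's coarse-to-fine schedule) can be viewed as different weightings of the frequency bands. The sketch below is a minimal NumPy illustration, assuming a cosine ramp controlled by a progress parameter `alpha` that grows from 0 to the number of frequency bands over training. The function names, the `2^k * pi` frequency convention, and the output layout are assumptions made for illustration and are not taken from the released code.

```python
import numpy as np


def coarse_to_fine_weights(alpha: float, num_freqs: int) -> np.ndarray:
    """Per-band weights in [0, 1] for a progress parameter alpha in [0, num_freqs].

    Band k is off while alpha < k, fully on once alpha >= k + 1,
    and follows a smooth cosine ramp in between.
    """
    k = np.arange(num_freqs, dtype=np.float64)
    t = np.clip(alpha - k, 0.0, 1.0)
    return 0.5 * (1.0 - np.cos(np.pi * t))


def weighted_positional_encoding(x: np.ndarray, alpha: float, num_freqs: int) -> np.ndarray:
    """Encode coordinates x of shape (N, D) into shape (N, D + 2 * D * num_freqs).

    The raw coordinates are always kept; each sin/cos band is scaled by its weight.
    """
    x = np.asarray(x, dtype=np.float64)
    w = coarse_to_fine_weights(alpha, num_freqs)        # (L,)
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi       # (L,), assumed convention
    angles = x[:, :, None] * freqs                      # (N, D, L)
    bands = np.concatenate([w * np.sin(angles), w * np.cos(angles)], axis=-1)
    return np.concatenate([x, bands.reshape(x.shape[0], -1)], axis=-1)
```

Under these assumptions, `alpha = 0` reduces to the "w/o positional encoding" input (all bands zeroed), `alpha = num_freqs` gives the full encoding, and intermediate values enable low frequencies first so that early registration relies on smooth signals.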

NeRF (3D): synthetic objects

Given multi-view observations of a synthetic object with imperfect camera poses, we visualize how BARF effectively corrects the camera poses and optimizes the 3D scene representation at the same time, whereas the baseline NeRF (blindly allowing for variable poses) falls into suboptimal solutions. (optimized vs. ground truth)

| NeRF w/ full positional encoding | BARF (ours) |
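The joint problem of registering the cameras and fitting the scene can be sketched as a single synthesis-based objective. The notation here is assumed for illustration: $M$ training images $\mathcal{I}_i$, one camera pose $\mathbf{p}_i$ per image, network parameters $\Theta$, and $\hat{\mathcal{I}}(\mathbf{x}; \mathbf{p}_i, \Theta)$ for the color rendered at pixel $\mathbf{x}$.

$$
\min_{\mathbf{p}_1, \dots, \mathbf{p}_M,\; \Theta} \;\; \sum_{i=1}^{M} \sum_{\mathbf{x}} \left\| \hat{\mathcal{I}}(\mathbf{x}; \mathbf{p}_i, \Theta) - \mathcal{I}_i(\mathbf{x}) \right\|_2^2
$$

A standard NeRF minimizes the same photometric error over $\Theta$ alone with the poses held fixed; here the poses are treated as additional optimization variables.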

We compare our novel view synthesis results below. From imperfect camera poses, BARF optimizes for high-fidelity 3D scene representations with fewer artifacts, even retaining the ability to model view-dependent appearance.

| image | depth | image | depth | image | depth |

| NeRF w/o positional encoding | NeRF w/ full positional encoding | BARF (ours) |

NeRF (3D): real-world scenes

We demonstrate how BARF can learn 3D scene representations from RGB video sequences with unknown camera poses.

We compare the view synthesis results and the camera poses over the course of optimization.

Naively applying positional encoding in NeRF is detrimental to the registration process, which can get stuck in suboptimal solutions easily.

BARF can optimize for poses that agree closely with the structure-from-motion solutions.

2nd row: optimized camera poses

3rd row: optimized camera poses aligned to poses from COLMAP via Procrustes analysis

| image | depth | image | depth |

| NeRF w/ full positional encoding | BARF (ours) |
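Because poses recovered from images alone are defined only up to a global similarity transform, they need to be aligned to the COLMAP poses before the two can be compared. The following is a minimal sketch of one common form of Procrustes analysis, a least-squares similarity fit (scale, rotation, translation) between two sets of camera centers, computed via SVD. The function name and the choice to align camera centers only are assumptions for illustration; this is not necessarily the exact procedure used for the figures.

```python
import numpy as np


def procrustes_align(src: np.ndarray, dst: np.ndarray):
    """Fit s, R, t minimizing sum_i || s * R @ src_i + t - dst_i ||^2.

    src, dst: (N, 3) arrays of corresponding camera centers.
    Returns (s, R, t) with scalar s, rotation R of shape (3, 3), translation t of shape (3,).
    """
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    n = src.shape[0]

    # Center both point sets.
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # SVD of the cross-covariance between the centered sets.
    cov = dst_c.T @ src_c / n
    U, S, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(U @ Vt))
    D = np.diag([1.0, 1.0, d])
    R = U @ D @ Vt

    # Optimal scale and translation given R.
    var_src = (src_c ** 2).sum() / n
    s = float((S * np.diag(D)).sum() / var_src)
    t = mu_dst - s * (R @ mu_src)
    return s, R, t
```

Given the fit, the optimized centers are mapped into the reference frame with `aligned = s * src @ R.T + t`; comparing camera orientations would additionally require applying `R` to each optimized rotation.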

We compare our novel view synthesis results below (with circular pose perturbations around a centered training pose). BARF can optimize for much higher-quality scene representations from unknown camera poses.

| image | depth | image | depth |

| NeRF w/ full positional encoding | BARF (ours) |
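One way to produce circular pose perturbations around a centered training pose is to move the camera center along a small circle in the camera's own image plane while keeping its orientation fixed. The sketch below illustrates that idea only; the function name, the radius, the number of views, and the convention that the camera x and y axes span the circle are all assumptions and are not taken from the released code.

```python
import numpy as np


def circular_pose_perturbations(R_c2w, center, radius=0.1, num_views=8):
    """Return (num_views, 3) camera centers on a circle around `center`.

    R_c2w:  (3, 3) camera-to-world rotation of the reference pose.
    center: (3,) camera center of the reference pose in world coordinates.
    The circle lies in the plane spanned by the camera's x and y axes.
    """
    R_c2w = np.asarray(R_c2w, dtype=np.float64)
    center = np.asarray(center, dtype=np.float64)
    angles = np.linspace(0.0, 2.0 * np.pi, num_views, endpoint=False)

    # Offsets expressed in the camera frame (no motion along the viewing axis).
    offsets_cam = np.stack(
        [radius * np.cos(angles), radius * np.sin(angles), np.zeros_like(angles)],
        axis=-1,
    )

    # Rotate the offsets into the world frame and add them to the reference center.
    return center + offsets_cam @ R_c2w.T
```

Each returned center can be paired with the unchanged rotation `R_c2w` to form a novel viewpoint for rendering.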

Presentation

Code

Instructions for downloading the datasets are also provided on the GitHub page.

Publications

ICCV 2021 paper: https://arxiv.org/abs/2104.06405

BibTeX:

title={BARF: Bundle-Adjusting Neural Radiance Fields},

author={Lin, Chen-Hsuan and Ma, Wei-Chiu and Torralba, Antonio and Lucey, Simon},

booktitle={IEEE International Conference on Computer Vision ({ICCV})},

year={2021}

}

Poster

Acknowledgements

We thank Chaoyang Wang, Mengtian Li, Yen-Chen Lin, Tongzhou Wang, Sivabalan Manivasagam, and Shenlong Wang for helpful discussions and feedback on the paper. This work was supported by the CMU Argo AI Center for Autonomous Vehicle Research.