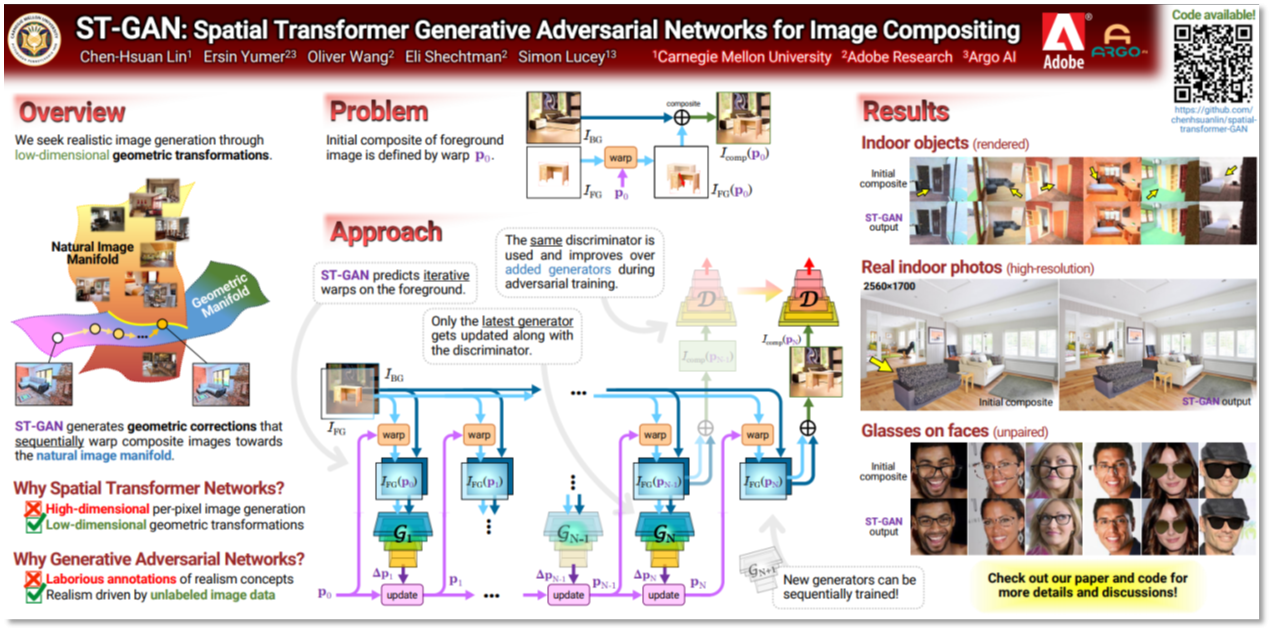

Abstract

We address the problem of finding realistic geometric corrections to a foreground object such that it appears natural when composited into a background image. To achieve this, we propose a novel Generative Adversarial Network (GAN) architecture that utilizes Spatial Transformer Networks (STNs) as the generator, which we call Spatial Transformer GANs (ST-GANs). ST-GANs seek image realism by operating in the geometric warp parameter space. In particular, we exploit an iterative STN warping scheme and propose a sequential training strategy that achieves better results than naive training of a single generator. One of the key advantages of ST-GAN is its indirect applicability to high-resolution images, since the predicted warp parameters are transferable between reference frames. We demonstrate our approach in two applications: (1) visualizing how indoor furniture (e.g. from product images) might be perceived in a room, and (2) hallucinating how accessories such as glasses would look when matched with real portraits.
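The abstract makes two mechanical claims: warp updates are applied iteratively, and predicted warp parameters transfer between reference frames (and therefore to high-resolution images). The small sketch below illustrates both ideas under stated assumptions. It assumes each warp is a 3x3 homography acting on pixel coordinates, and that each stage's update is left-multiplied onto the current warp (the opposite ordering convention is equally possible). All function names and example matrices are hypothetical and are not taken from the ST-GAN code. If warps are instead expressed in normalized coordinates, the matrix carries over between resolutions unchanged. With pixel coordinates, it must be conjugated by a scaling matrix, as shown.

```python
# Hypothetical sketch, not the ST-GAN implementation. Assumptions: warps are
# 3x3 homographies on continuous pixel coordinates (x, y, 1), and each
# generator stage's update is left-multiplied onto the current warp.

Mat = list[list[float]]


def identity() -> Mat:
    return [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]


def matmul(a: Mat, b: Mat) -> Mat:
    # Plain 3x3 matrix product a @ b.
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def compose_updates(updates: list[Mat]) -> Mat:
    # Iterative warping: H_i = dH_i @ H_{i-1}, starting from H_0 = identity.
    h = identity()
    for dh in updates:
        h = matmul(dh, h)
    return h


def scale(sx: float, sy: float) -> Mat:
    return [[sx, 0.0, 0.0], [0.0, sy, 0.0], [0.0, 0.0, 1.0]]


def transfer(h_low: Mat, low_size: tuple[int, int],
             high_size: tuple[int, int]) -> Mat:
    # Re-express a warp predicted at low resolution in high-resolution pixel
    # coordinates: H_high = S @ H_low @ S^-1, where S maps low-res pixels to
    # high-res pixels. Sizes are (width, height).
    sx = high_size[0] / low_size[0]
    sy = high_size[1] / low_size[1]
    return matmul(matmul(scale(sx, sy), h_low), scale(1.0 / sx, 1.0 / sy))


def apply(h: Mat, x: float, y: float) -> tuple[float, float]:
    # Map a point through the homography and dehomogenize.
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return u / w, v / w


# Hypothetical updates from two stages: a shift, then a mild perspective tilt.
shift = [[1.0, 0.0, 5.0], [0.0, 1.0, -3.0], [0.0, 0.0, 1.0]]
tilt = [[1.0, 0.02, 0.0], [0.0, 1.0, 0.0], [0.0005, 0.0, 1.0]]
h_low = compose_updates([shift, tilt])
h_high = transfer(h_low, (160, 120), (1920, 1440))
```

Mapping a low-resolution point through `h_low` and then scaling it up gives the same result as scaling the point first and mapping it through `h_high`. In this sense, a warp predicted on a small image can be reused on its full-resolution original.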

Video

Code, dataset, and pretrained models

The code is hosted on GitHub (TensorFlow).

The rendered indoor object dataset can be downloaded here.

The pretrained models can be downloaded here (indoor objects) and here (glasses).

Publications

CVPR 2018 paper: [ link ]

Supplementary material: [ link ]

arXiv preprint: https://arxiv.org/abs/1803.01837

BibTeX:

@inproceedings{lin2018stgan,
  title={ST-GAN: Spatial Transformer Generative Adversarial Networks for Image Compositing},
  author={Lin, Chen-Hsuan and Yumer, Ersin and Wang, Oliver and Shechtman, Eli and Lucey, Simon},
  booktitle={IEEE Conference on Computer Vision and Pattern Recognition ({CVPR})},
  year={2018}
}